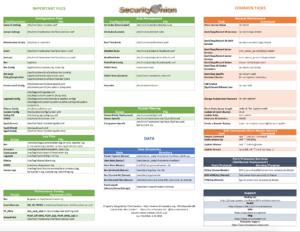

I’ve been a Security Onion user for a long time and recommend it to people looking for a pre-built sensor platform. I recently put together a Security Onion cheat sheet highlighting key information to help you use, configure, and customize your installation.

**Update 4/23/2018:** Wes Lambert from Security Onion Solutions updated this cheat sheet to match the latest Security Onion version, which includes the Elastic Stack. You can download the updated cheat sheet directly from the Security Onion wiki:

Download the Security Onion Cheat Sheet

Special thanks to Doug Burks, Phil Plantamura, and Wes Lambert for reviewing this and providing valuable input. Enjoy!